Thanks to a new technique, DNA strands can be easily converted into tiny fibre optic cables that guide light along their length. Optical fibres made this way could be important in optical computers, which use light rather than electricity to perform calculations, or in artificial photosynthesis systems that may replace today's solar panels.

Both kinds of device need small-scale light-carrying "wires" that pipe photons to where they are needed. Now Bo Albinsson and his colleagues at Chalmers University of Technology in Gothenburg, Sweden, have worked out how to make them. The wires build themselves from a mixture of DNA and molecules called chromophores that can absorb and pass on light.

The result is similar to natural photonic wires found inside organisms like algae, where they are used to transport photons to parts of a cell where their energy can be tapped. In these wires, chromophores are lined up in chains to channel photons.

Light wire

Albinsson's team used a single type of chromophore called YO as their energy mediator. It has a strong affinity for DNA molecules and readily wedges itself between the "rungs" of bases that make up a DNA strand. The result is strands of DNA with YO chromophores along their length, transforming the strands into photonic wires just a few nanometres in diameter and 20 nanometres long. That's the right scale to function as interconnects in microchips, says Albinsson.

To prove this was happening, the team made DNA strands with an "input" molecule on one end to absorb light, and on the other end a molecule that emits light when it receives it from a neighbouring molecule. When the team shone UV light on a collection of the DNA strands after they had been treated with YO, the finished wires transmitted around 30% of the light received by the input molecule along to the emitting molecule.

Physicists have corralled chromophores for their own purposes in the past, but had to use a "tedious" and complex technique that chemically attaches them to a DNA scaffold, says Niek van Hulst, at the Institute of Photonic Sciences in Barcelona, Spain, who was not involved in the work.

The Gothenburg group's ready-mix approach gets comparable results, says Albinsson. Because his wires assemble themselves, he says they are better than wires made by the previous chemical method as they can self-repair: if a chromophore is damaged and falls free of the DNA strand, another will readily take its place. It should be possible to transfer information along the strands encoded in pulses of light, he told New Scientist.

Variable results

Philip Tinnefeld at the Ludwig Maximilian University of Munich in Germany says a price has been paid for the added simplicity.

Because the wire is self-assembled, he says, it's not clear exactly where the chromophores lie along the DNA strand. They are unlikely to be spread out evenly and the variation between strands could be large.

Van Hulst agrees and is investigating whether synthetic molecules made from scratch can be more efficient than modified DNA.

But both researchers think that with improvements, "molecular photonics" could have a wide range of applications, from photonic circuitry in molecular computers to light harvesting in artificial photosynthetic systems.

Journal reference: Journal of the American Chemical Society (DOI: 10.1021/ja803407t)

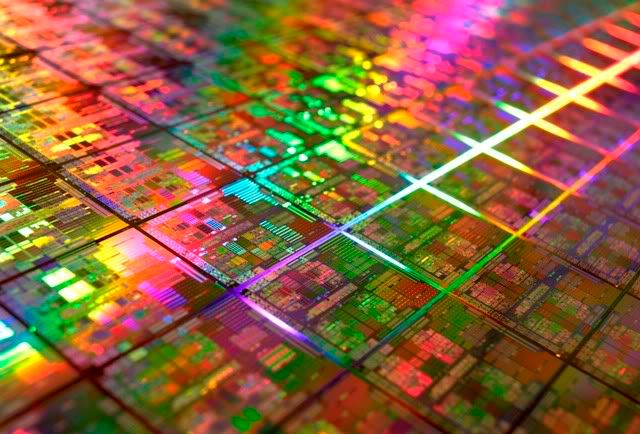

AMD is hitting new heights of achievement but that's still not enough to keep it from getting smoked by a much faster rival.

On Thursday the company is set to launch its much-awaited 45-nanometer quad-core processor for servers, though the release comes months after rival Intel put out a comparable product. Codenamed Shanghai, it is AMD's first processor to use the smaller, faster 45-nm technology instead of the older 65-nm technology.

Meanwhile, Intel is planning to release its latest 45-nm desktop chips on Monday. Codenamed Nehalem, they will be known officially as Core i7. AMD says it won't have a comparable desktop chip until next year.

In the carefully orchestrated roadmaps used by semiconductor companies, chips intended for use in servers typically precede desktop and notebook processors by several months.

"I think of Shanghai as the last dance of the company," says Patrick Wang, an analyst with brokerage and research firm Wedbush Morgan. "Shanghai is significant because AMD needs it to get back into the game."

For now, all eyes are on the launch of the Shanghai chips from AMD. The chips mark AMD's debut in the 45-nanometer process technology and are seen as a bid to move forward after the disastrous performance of its previous Barcelona chips, which were 65-nm quad-core processors.

Barcelona was widely faulted for its technical glitches that led to multiple delays in its launch and its high pricing. The combination, some say, helped Intel gain market share at AMD's expense.

The latest 45-nm quad-core Opteron processor offers increased power efficiency, fits into the same socket as Barcelona, allowing for "non-disruptive" upgrades, and is priced competitively, says Brent Kerby, senior product marketing manager for server and workstations at AMD.

"Shanghai is looking really good and we delivered it three months ahead of our planned schedule," he says.

AMD's new chip seems impressive, say analysts, and would be groundbreaking except for the fact that Intel has had similar chips on the market for months. Intel's quad-core 45-nm server processor, codenamed Harpertown and officially known as Xeon, became available around the same time as AMD launched its 65-nm Barcelona processor.

"Barcelona was completely botched in terms of execution and was a failure on many fronts: technology, pricing and market share," says Wang. "The reason that AMD is in such a dire financial situation is because of Barcelona."

Now Shanghai, hopes AMD, will change all that.

"With Barcelona we had a completely new redesign," says AMD's Kerby. "We have taken on the learnings and capabilities from Barcelona and improved on it for Shanghai."

AMD is also at least six months behind Intel when it comes to six-core processors, says PC analyst Shane Rau with research firm IDC. AMD plans to introduce a six-core processor called Istanbul in mid-2009.

But Intel's six-core chip, called Dunnington, has already been available for the last few weeks. Still, AMD has some breathing space there. Only about 5% of Intel's shipments in the third quarter were Dunnington, giving AMD some time to catch up.

Shanghai may have helped AMD move closer to Intel in terms of comparable technology for server processors. But on the desktop side, the company still has an uphill climb.

AMD's 45-nm desktop chip, codenamed Deneb, is likely to launch early next year. That means Intel's Core i7 processors will have a comfortable lead over their rival.

"Intel's going to be the only game in town for a while for the latest in desktop processors," says Wang.

With AMD and Intel locked in yet another fierce battle, here's a breakdown of how the two companies' latest releases stack up.

AMD Learns Its Lessons With Shanghai

AMD says it did the "heavy lifting" for Barcelona and has since streamlined its processes to put out a next-generation processor faster.

Its latest 45-nm quad-core processors offer significantly higher CPU clock frequencies with the same power consumption as earlier generations.

"What these specs mean is it will be a higher performing processor and offer better price performance per watt," says Rau.

Shanghai's compatibility with sockets designed for Barcelona means OEMs can buy it and drop it in to their existing designs for servers and motherboards. That helps reduce costs for them and makes it easier to upgrade, says Rau.

The chips also increase the size of the Level 3 cache by 200%, to 6 MB, which helps speed memory-intensive applications like virtualization, databases and Java apps, says AMD.
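As a quick check of that arithmetic, a 200% increase means the cache has tripled, which would put the previous generation's Level 3 cache at 2 MB. The short Python sketch below works backwards from the two figures quoted above. The function name is purely illustrative, and the 2 MB result is derived from the article's numbers rather than quoted from AMD.

```python
def size_before_increase(new_size_mb: float, percent_increase: float) -> float:
    """Return the original size, given the new size and the percentage increase."""
    # A 200% increase multiplies the original by (1 + 200/100) = 3.
    return new_size_mb / (1 + percent_increase / 100)


# Figures from the article: the cache grows by 200%, to 6 MB.
previous_l3_mb = size_before_increase(6, 200)
print(previous_l3_mb)  # 2.0
```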

The processors also draw up to 35% less power at idle compared to the previous generation while delivering up to 35% more performance, says the company.

"AMD is going to be successful in applications that are memory and floating point intensive, which means in databases and scientific applications," says Wang.

Intel Races Ahead to Desktops

AT A GLANCE: Intel Core i7

Faster Processor: Almost four to six times faster than Intel's current platform.

Greater power efficiency: Allows the processor to switch off power to an idle or unused core.

Integrated memory controller: Increases bandwidth directly available to the processor, reducing lag time before a CPU can begin executing the next instruction.

Simultaneous multi-threading: Previously used in some Pentium and Xeon processors, it makes a comeback. Allows double the number of threads to run simultaneously on each processor, boosting performance.

The first three Core i7 chips will be quad-core, with clock speeds of 2.66GHz, 2.93GHz and 3.20GHz and an integrated memory controller.
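To make the thread arithmetic concrete, here is a minimal Python sketch. It assumes that "double the number of threads" means two hardware threads per core, so a quad-core chip would present eight logical processors to the operating system. The function name and the default of two threads per core are illustrative assumptions, not figures from Intel.

```python
def logical_threads(cores: int, threads_per_core: int = 2) -> int:
    """Number of threads a chip can run at once with simultaneous multi-threading."""
    return cores * threads_per_core


# A quad-core chip, with and without simultaneous multi-threading.
print(logical_threads(4))                      # 8
print(logical_threads(4, threads_per_core=1))  # 4
```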

Codenamed Bloomfield and officially named Core i7, Intel's 45-nm desktop processors are targeted largely at gaming PCs, but Intel plans to have versions ready for business users in the next few weeks.

The 65-nm vs. 45-nm difference is important because on a macro-level it is one of the factors that affects pricing, say analysts.

"When Intel can manufacture in 45-nm earlier than AMD it can possibly have a cost advantage, which can be passed on to users," says Rau. "A 65-nm die is more expensive to cast than a 45-nm one."

For Intel, that means more than just being a generation ahead of AMD: It means that Intel will be enjoying fatter margins while AMD is still struggling to catch up. In the end, that could translate into enough market share to cripple AMD for good.

One of the first things that caught my attention when pictures of the upcoming Samsung NC10 netbook began to surface was its keyboard. While many netbooks attempt to save space by consolidating some keys and shrinking some others, the NC10’s 84-key keyboard doesn’t seem to make many compromises.

Unlike the Asus Eee PC, the NC10 positions the right shift key above the arrow keys, not to the right of them, making it easy for touch-typists to find. And by dropping the arrow keys a bit below the rest of the keyboard, there’s even room for dedicated page up and down buttons. Many other netbooks require you to hold down the Fn key while hitting the arrow buttons to access the page up and down features.

French site Blogeee scored some new high-resolution images of the Samsung NC10, and I have to say it’s looking good. Blogeee also uncovered a few new details about the netbook: it will support an external display at resolutions up to 2048 x 1536 and 85Hz, and it has HD audio and a 1.3MP camera.

We’ve also now got some dimensions to go with the Samsung NC10: 261mm x 185mm x 30mm or about 10.3″ x 7.3″ x 1.2″. The netbook weighs 1.33kg or about 2.9 pounds.
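For anyone who wants to double-check the metric-to-imperial figures, the conversions can be reproduced with a few lines of Python. This is just a sketch: the helper names are made up for illustration, and the results are rounded to one decimal place to match the approximate values quoted above.

```python
MM_PER_INCH = 25.4   # exact, by definition of the inch
LB_PER_KG = 2.20462  # pounds per kilogram, rounded


def mm_to_inches(mm: float) -> float:
    return mm / MM_PER_INCH


def kg_to_pounds(kg: float) -> float:
    return kg * LB_PER_KG


# NC10 dimensions and weight as quoted: 261mm x 185mm x 30mm, 1.33kg.
dims_in = [round(mm_to_inches(mm), 1) for mm in (261, 185, 30)]
weight_lb = round(kg_to_pounds(1.33), 1)

print(dims_in)    # [10.3, 7.3, 1.2]
print(weight_lb)  # 2.9
```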
